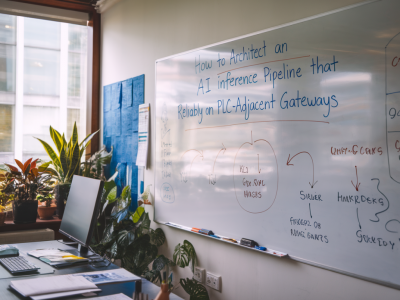

How to implement hot failover for Siemens S7 controllers across two plants without buying a forklift of spare hardware

Why I tackled cross‑plant hot failover without a hardware shopping spree

I've been on the shop floor when a single PLC failure stopped two downstream lines and forced a weekend of overtime and...
