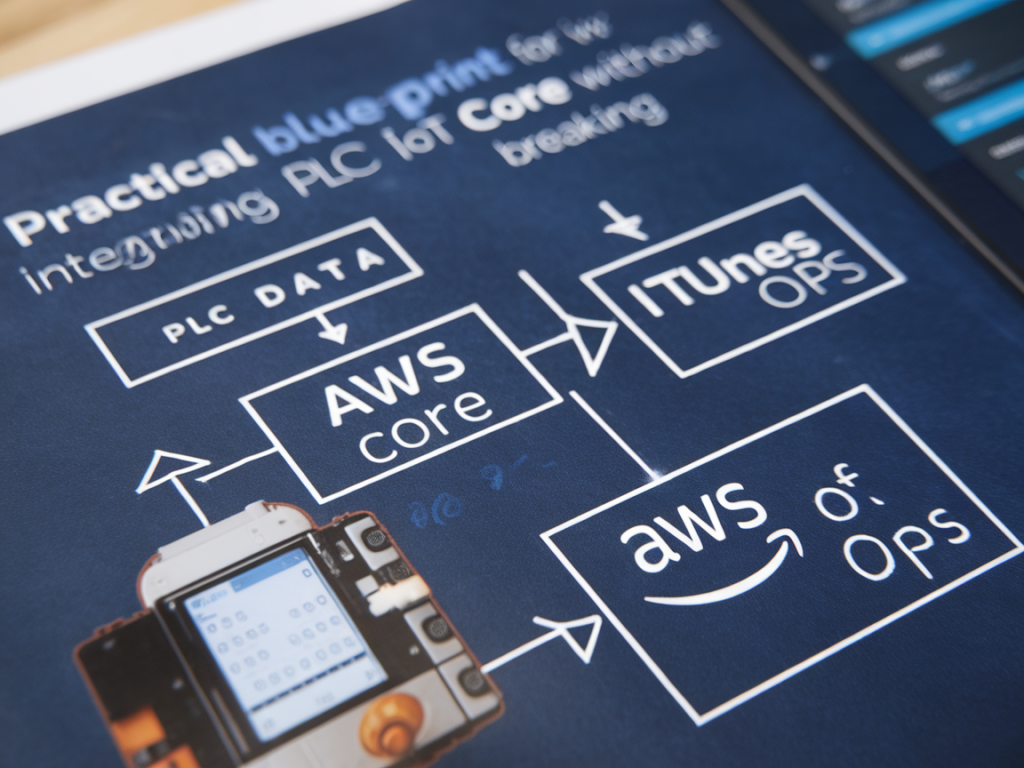

I want to share a practical, no‑fluff blueprint I use when integrating PLC data into AWS IoT Core while keeping operations teams comfortable and systems stable — or as our ops folks joke, “without breaking the iTunes of ops.” This is a pragmatic guide based on fieldwork across automotive and process plants where the stakes are uptime, quality and safety. I’ll walk through architecture choices, data modelling, security, edge considerations, and rollout tactics that smooth the handoff between OT and cloud.

Why this matters (and the usual friction points)

Bringing PLC telemetry into the cloud unlocks analytics, predictive maintenance and cross‑plant visibility. But in practice you hit three recurring problems:

- Ops risk: Direct changes to PLC code or network topology to support cloud connectivity often trigger fear — rightly so — because it can affect safety and uptime.

- Data noise: PLCs produce high‑frequency, verbose signals. Sending everything to the cloud is expensive and creates downstream processing headaches.

- Model mismatch: Cloud teams want normalized, timestamped, schematized events. PLCs are tag lists, memory maps and cyclic scans.

My approach is to put a small, deterministic edge layer between the PLC and AWS IoT Core that preserves operational integrity, shapes data, and enforces secure, observable flows.

Reference architecture — the safe, pragmatic path

At a high level, I recommend the following components:

- OT network: PLCs, HMIs, and control VLANs remain unchanged.

- Edge gateway: industrial PC or certified IoT gateway that speaks PLC protocols (Profinet, EtherNet/IP, Modbus TCP, OPC UA).

- Edge runtime: a data collector (e.g., Kepware, Ignition Edge, Node‑RED, or a lightweight custom agent), containerized where the product supports it, that performs local filtering, aggregation and normalization.

- Secure outbound connection: MQTT over mutual TLS to AWS IoT Core, either directly or through AWS IoT Greengrass when local compute and device shadows are needed.

- Cloud ingestion and processing: AWS IoT Core rules route messages to Kinesis Data Streams, Lambda, or Timestream/S3 for long‑term storage and analytics.

This keeps PLCs untouched, centralizes protocol conversions at the gateway, and provides a single, hardened egress point for cloud connectivity.

Edge responsibilities — what I always implement

The edge gateway should be more than a protocol translator. Here’s the checklist I use:

- Non‑intrusive read mode: Use client polling or OPC UA reads, never writes. Never require PLC program changes.

- Data shaping: Implement sample rate down‑selection, change‑of‑state filtering, and statistical aggregation (min/max/avg) to reduce cloud traffic.

- Schema mapping: Map raw tags to a canonical telemetry schema with metadata: asset_id, tag_id, units, engineering limit, reliability score, factory_zone.

- Local buffering and store‑and‑forward: Handle network loss with journaling to SSD and retry logic to avoid data gaps.

- Local compute/ML for latency‑sensitive use cases: Run lightweight models with Greengrass or containerized inference when immediate action is needed.

- Observability: Expose health metrics for gateway CPU, PLC polling latency, queue depth and last successful cloud publish.
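
To make a few of these checklist items concrete, here is a minimal sketch of change‑of‑state (deadband) filtering, min/max/avg aggregation, and a store‑and‑forward journal. The class names, the `outbox` table, and the choice of SQLite are my assumptions for illustration, not features of any particular gateway product, and the `publish` callable stands in for whatever MQTT client you use.

```python
import json
import sqlite3
from statistics import mean


class DeadbandFilter:
    """Forward a sample only when it moves more than `deadband`
    away from the last value forwarded for the same tag."""

    def __init__(self, deadband: float):
        self.deadband = deadband
        self._last = {}  # tag_id -> last forwarded value

    def accept(self, tag_id: str, value: float) -> bool:
        last = self._last.get(tag_id)
        if last is None or abs(value - last) > self.deadband:
            self._last[tag_id] = value
            return True
        return False


def summarize(values):
    """Collapse one window of samples into min/max/avg plus a count."""
    return {"min": min(values), "max": max(values),
            "avg": mean(values), "count": len(values)}


class StoreAndForward:
    """Journal messages in SQLite and delete each one only after
    the publish callable returns without raising."""

    def __init__(self, path: str = ":memory:"):
        # Use a file path on the gateway SSD so the journal survives restarts.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS outbox ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)"
        )
        self.db.commit()

    def enqueue(self, message: dict) -> None:
        self.db.execute("INSERT INTO outbox (body) VALUES (?)",
                        (json.dumps(message),))
        self.db.commit()

    def depth(self) -> int:
        """Queue depth, suitable for exposing as a health metric."""
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]

    def flush(self, publish) -> int:
        """Publish oldest first; stop at the first network error so
        ordering is preserved and nothing is dropped."""
        sent = 0
        rows = self.db.execute(
            "SELECT id, body FROM outbox ORDER BY id").fetchall()
        for row_id, body in rows:
            try:
                publish(json.loads(body))
            except OSError:  # includes ConnectionError and timeouts
                break
            self.db.execute("DELETE FROM outbox WHERE id = ?", (row_id,))
            self.db.commit()
            sent += 1
        return sent
```

In a real agent I would call `flush` on a timer with backoff and report `depth()` alongside the other gateway health metrics. The deadband value is something to agree with the control engineers per tag, not a global constant.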

Security and compliance — the non‑negotiables

Security often determines whether ops will sign off. I stick to these rules:

- One‑way outbound only: Configure firewalls so the gateway initiates all connections to the cloud. Avoid inbound rules that would let cloud services reach PLCs directly.

- Mutual TLS and least‑privilege policies: Authenticate to AWS IoT Core with X.509 device certificates, and attach AWS IoT policies scoped to only the required topics and actions.

- Network segmentation: Place the gateway in a DMZ that can reach PLC VLANs via read‑only SCADA interfaces and the internet via an approved egress route.

- Audit trails: Log every change to gateway configuration, and use AWS CloudTrail and AWS IoT Device Defender where possible.
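
As a rough illustration of the least‑privilege rule, the sketch below generates an AWS IoT policy document that lets a gateway connect and publish under one topic prefix, and nothing else. The function name, region, account ID and prefix are placeholders I made up. It also assumes the certificate is attached to a registered thing and the gateway connects with the thing name as its client ID, since that is what the `${iot:Connection.Thing.ThingName}` policy variable relies on.

```python
import json


def build_iot_policy(region: str, account_id: str, topic_prefix: str) -> dict:
    """Return an AWS IoT policy allowing connect and publish only.

    No iot:Subscribe or iot:Receive is granted, so the gateway cannot
    be sent messages over MQTT under this policy.
    """
    base = f"arn:aws:iot:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "iot:Connect",
                # Client ID must match the registered thing name.
                "Resource": f"{base}:client/${{iot:Connection.Thing.ThingName}}",
            },
            {
                "Effect": "Allow",
                "Action": "iot:Publish",
                # Publishing is limited to topics under the given prefix.
                "Resource": f"{base}:topic/{topic_prefix}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    policy = build_iot_policy("eu-west-1", "123456789012", "factory/site1")
    print(json.dumps(policy, indent=2))
```

If you later need device shadows or commands, add those actions explicitly and review them with ops rather than widening the wildcard.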

Data model and topic strategy

Designing topic namespaces and payloads pays dividends. I recommend:

- Hierarchical topics: factory/site/cell/asset/telemetry — this simplifies subscription rules and security scoping.

- Two payload types: fast telemetry (compact, high‑freq numeric points) and event/state messages (richer JSON for alarms, recipes, and quality checks).

- Versioned schemas: Add a schema_version field so consumers can evolve without breaking producers.

| Use case | Topic | Payload |
|---|---|---|
| High‑freq sensor | factory/site1/cellA/motor01/telemetry | timestamp, tag_id, value, quality |
| Alarm | factory/site1/cellA/motor01/alarms | timestamp, alarm_code, severity, text, operator_id |
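
Here is one way the topic hierarchy and a versioned telemetry payload could be built on the gateway. It is only a sketch: the helper names, the `"1.0"` version label, the `"good"` quality value and the ISO 8601 UTC timestamp format are my assumptions, so adapt them to whatever schema you agree with consumers.

```python
import json
from datetime import datetime, timezone
from typing import Optional

SCHEMA_VERSION = "1.0"  # hypothetical version label


def build_topic(site: str, cell: str, asset: str,
                channel: str = "telemetry") -> str:
    """Return factory/<site>/<cell>/<asset>/<channel>."""
    segments = ["factory", site, cell, asset, channel]
    for segment in segments:
        # '/', '+' and '#' have special meaning in MQTT topics and filters.
        if not segment or any(ch in segment for ch in "/+#"):
            raise ValueError(f"invalid topic segment: {segment!r}")
    return "/".join(segments)


def build_telemetry(tag_id: str, value: float, quality: str,
                    timestamp: Optional[datetime] = None) -> str:
    """Serialize one compact telemetry point with a schema_version field."""
    ts = timestamp or datetime.now(timezone.utc)
    body = {
        "schema_version": SCHEMA_VERSION,
        "timestamp": ts.isoformat(),
        "tag_id": tag_id,
        "value": value,
        "quality": quality,
    }
    # Compact separators keep high-frequency messages small.
    return json.dumps(body, separators=(",", ":"))


if __name__ == "__main__":
    print(build_topic("site1", "cellA", "motor01"))
    print(build_telemetry("motor01.speed_rpm", 1480.0, "good"))
```

Rejecting wildcard characters in segments keeps a mistyped asset name from turning into a topic that no longer matches the policy or the subscription rules.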

Choosing between direct MQTT vs. Greengrass

I typically choose AWS IoT Core MQTT for straightforward telemetry and AWS IoT Greengrass when:

- Local decision logic is needed (safety interlocks, emergency stop coordination).

- Connectivity is intermittent and local actions must continue.

- Edge ML inference is required with model lifecycle management.

Greengrass adds complexity and an operational footprint, so I only introduce it when the use case demands local compute or device shadow sync beyond simple telemetry.

Operationalizing and winning buy‑in

Technical design is only half the battle. To avoid cultural resistance and ops headaches I follow a rollout playbook:

- Pilot small: Start with a non‑critical cell and map current manual processes to cloud outcomes so ops see tangible benefits (reduced downtime, less manual logging).

- Co‑design with ops: Bring control engineers into schema and poll‑rate decisions. Make them owners of gateway health dashboards.

- Immutable PLCs: Commit to not changing PLC logic during the pilot phase. Any necessary control changes go through normal PLC change control processes.

- Runbooks and rollback: Create clear runbooks for gateway failures and a tested rollback plan to cut the cloud link without impacting control loops.

- Measure what matters: Define KPIs — mean time to detect anomalies, data completeness, cloud cost per tag — and monitor them during pilot and scale phases.
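
Of these KPIs, data completeness is the easiest to pin down in code. The sketch below uses one possible definition, which is my assumption rather than a standard: the share of expected PLC reads that the gateway actually obtained in a window, for a tag polled at a fixed interval and counted before any deadband filtering.

```python
def data_completeness(received: int, window_s: float,
                      poll_interval_s: float) -> float:
    """Fraction of expected samples actually read in the window (0.0 to 1.0)."""
    if poll_interval_s <= 0:
        raise ValueError("poll_interval_s must be positive")
    expected = int(window_s // poll_interval_s)
    if expected <= 0:
        raise ValueError("window is shorter than one poll interval")
    # Cap at 1.0 so duplicate reads cannot mask gaps elsewhere.
    return min(received / expected, 1.0)


if __name__ == "__main__":
    # A tag polled every second that yielded 3420 reads in one hour.
    print(data_completeness(3420, window_s=3600, poll_interval_s=1.0))
```

Whatever definition you settle on, agree it with ops before the pilot so the number means the same thing on both sides.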

Tools and products I use

Concrete tools save time. My go‑to stack includes:

- Kepware or Inductive Automation Ignition Edge for protocol bridging and tag mapping.

- A lightweight agent (custom Go or Node.js) when I need precise control over filtering and retry logic.

- AWS IoT Core with managed MQTT, AWS IoT Device Defender for security monitoring, and Kinesis/Firehose + S3/Timestream for storage.

- AWS IoT Greengrass where local compute and model inference are required.

Using vendors that are common in manufacturing (Kepware, Rockwell, Siemens) helps the operations team feel comfortable — interoperability with their existing tools matters more than theoretical elegance.

Common pitfalls to avoid

From my deployments, here are mistakes that bite hardest:

- Sending raw PLC scans to the cloud — leads to cost blowout and noisy models.

- Changing PLC programs for connectivity without following change control — it breaks trust.

- Not planning for time synchronization — inconsistent timestamps ruin correlation and analytics.

- Underestimating lifecycle ops — edge devices need patching, certificate rotation and monitoring just like PLCs do.
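
On the lifecycle point, a small scheduled check can flag certificates that are due for rotation. This is a sketch only: the function names and the 30‑day threshold are my assumptions, and it expects the certificate's `notAfter` string in the `Jan  5 09:34:43 2018 GMT` style that Python's `ssl.cert_time_to_seconds` parses.

```python
import ssl
import time
from typing import Optional

SECONDS_PER_DAY = 86400


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days remaining before the certificate's notAfter time.

    `now` is seconds since the epoch; it defaults to the current time
    and is a parameter mainly so the check can be tested.
    """
    expires = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires - now) / SECONDS_PER_DAY


def needs_rotation(not_after: str, threshold_days: float = 30,
                   now: Optional[float] = None) -> bool:
    """True when the certificate expires within `threshold_days`."""
    return days_until_expiry(not_after, now) <= threshold_days
```

I would surface the result on the same gateway health dashboard that ops already owns, so an expiring certificate shows up as a warning long before it becomes an outage.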

If you want, I can publish a downloadable checklist or a sample CloudFormation/IoT policy template to accelerate a pilot at your site. Practical, deployable artifacts make the difference between theory and a successful, low‑risk integration that keeps ops smiling.